Tiny autoencoders are effective few-shot generative model detectors

Abstract

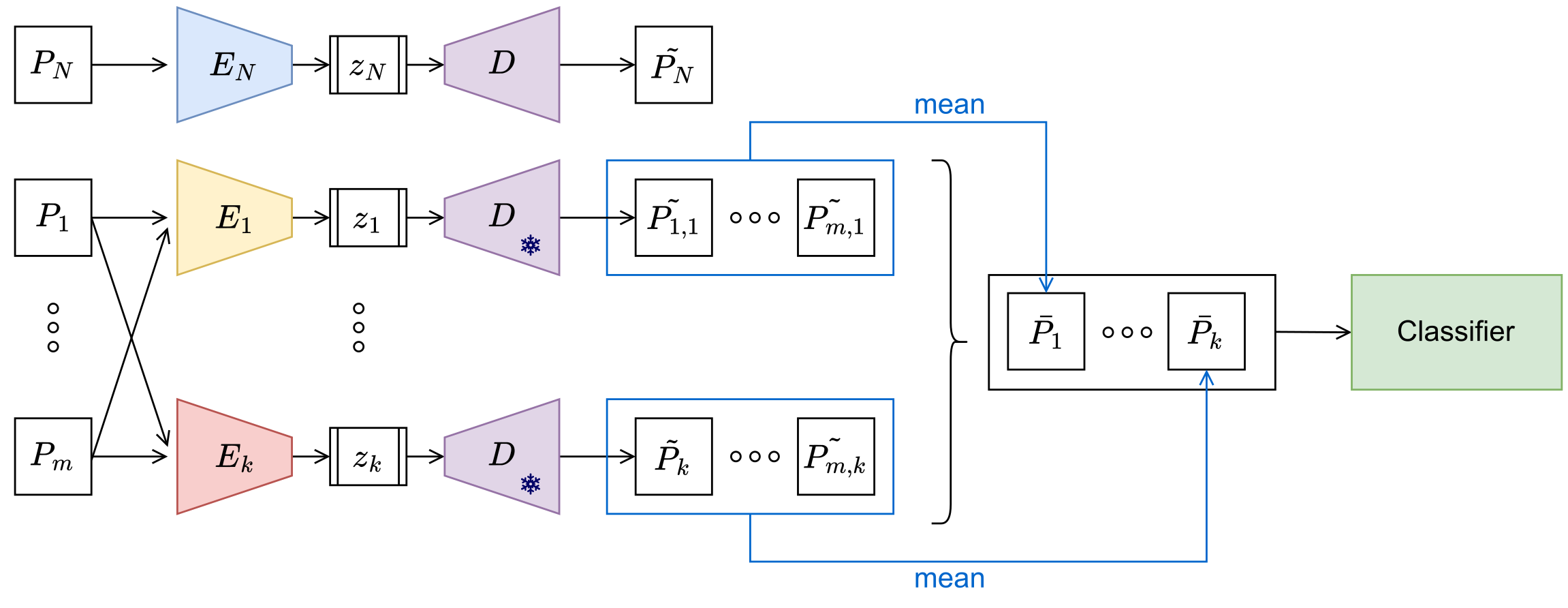

The development of generative AI techniques such as Generative Adversarial Networks and Diffusion Models has made it easy to create images, often extremely realistic, that do not represent reality. This capability has been exploited on multiple occasions by malicious actors to spread propaganda and fake news online. To trace the origin of generated content, the multimedia forensics community has developed techniques capable of identifying the specific model used to generate it. However, these techniques often require access to the model in question or large quantities of images generated by it, two conditions that are frequently unattainable. In this paper, we show that tiny autoencoders can be used effectively as few-shot detectors, identifying a generative model from a small number of training images. Moreover, we show how this technique can be easily adapted over time to add new models to the attribution system, enabling its use in a class-incremental scenario. Experiments demonstrate that the proposed technique is more effective than existing methods in all tested few-shot scenarios, showing its efficacy in situations where large training datasets are not available.
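The abstract does not spell out the attribution mechanism, so the following is only an illustrative sketch under stated assumptions, not the paper's implementation. It assumes one small autoencoder is fitted per generative model and that a query is attributed to the model whose autoencoder reconstructs it with the lowest error. A closed-form linear autoencoder (equivalent to PCA) stands in for a trained network, and synthetic feature vectors stand in for images. All class and function names (`TinyLinearAutoencoder`, `FewShotAttributor`, `make_samples`) are hypothetical.

```python
import numpy as np


class TinyLinearAutoencoder:
    """Linear autoencoder fitted in closed form (equivalent to PCA).

    Assumption: a stand-in for a small trained autoencoder.
    """

    def __init__(self, n_latent=2):
        self.n_latent = n_latent

    def fit(self, x):
        self.mean_ = x.mean(axis=0)
        # The top right-singular vectors span the best linear code subspace.
        _, _, vt = np.linalg.svd(x - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_latent]
        return self

    def reconstruction_error(self, x):
        centered = x - self.mean_
        code = centered @ self.components_.T   # encode
        recon = code @ self.components_        # decode
        return np.mean((centered - recon) ** 2, axis=1)


class FewShotAttributor:
    """Keeps one autoencoder per known generator (assumed design)."""

    def __init__(self, n_latent=2):
        self.n_latent = n_latent
        self.detectors = {}

    def add_model(self, name, features):
        # Adding a generator fits one new detector; existing ones are untouched.
        self.detectors[name] = TinyLinearAutoencoder(self.n_latent).fit(features)

    def attribute(self, features):
        names = list(self.detectors)
        errors = np.stack(
            [self.detectors[n].reconstruction_error(features) for n in names],
            axis=1,
        )
        # Pick the generator whose detector reconstructs each sample best.
        return [names[i] for i in errors.argmin(axis=1)]


def make_samples(rng, center, basis, n):
    """Synthetic features: a low-dimensional subspace plus small noise."""
    z = rng.normal(size=(n, basis.shape[0]))
    noise = 0.05 * rng.normal(size=(n, center.size))
    return center + z @ basis + noise


rng = np.random.default_rng(0)
dim = 16
generators = {}
for i, name in enumerate(["gen_a", "gen_b", "gen_c"]):
    center = np.zeros(dim)
    center[i] = 5.0
    generators[name] = (center, rng.normal(size=(2, dim)))

attributor = FewShotAttributor(n_latent=2)
for name in ["gen_a", "gen_b"]:
    attributor.add_model(name, make_samples(rng, *generators[name], n=10))

# Later, a new generator appears: only its own detector is fitted (10 shots).
attributor.add_model("gen_c", make_samples(rng, *generators["gen_c"], n=10))

queries = make_samples(rng, *generators["gen_c"], n=5)
print(attributor.attribute(queries))
```

In this sketch, adding a generator never retrains the existing detectors, which is one simple way to read the incremental setting mentioned in the abstract. The synthetic data is deliberately easy to separate and says nothing about performance on real images.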

BibTeX

@inproceedings{bindini2024,
  title={Tiny autoencoders are effective few-shot generative model detectors},
  author={Bindini, Luca and Bertazzini, Giulia and Baracchi, Daniele and Shullani, Dasara and Frasconi, Paolo and Piva, Alessandro},
  booktitle={2024 IEEE International Workshop on Information Forensics and Security (WIFS)},
  pages={1--6},
  year={2024},
  organization={IEEE},
  doi={10.1109/WIFS61860.2024.10810686}
}

Acknowledgments

This work was supported in part by the Italian Ministry of Universities and Research (MUR) under Grant 2017Z595XS, and in part by the Defense Advanced Research Projects Agency (DARPA) under Agreement No. HR00112090136.